Using product principles to navigate tensions in digital mental health design

By Maria Lyons and Jacinta Jardine

User engagement has been a focus of digital mental health interventions since the birth of the field, and it will likely always remain a concern. The simple fact is that it is hard to get people to engage with any mental health intervention, and digital solutions are no exception.

A recent machine learning (ML) study, involving data from 46,313 SilverCloud users, identified five engagement classes: low engagers, late engagers, high engagers with rapid disengagement, high engagers with moderate decrease, and highest engagers. The highest engagers made up only 10.6% of the sample.

These findings are consistent with recent reviews of mental health apps; one review of 93 apps found that the median 15-day retention rate was just 3.9%. It is apparent, then, why engagement is an ever-present topic of interest for researchers and designers alike.

But what is engagement?

In most research studies, engagement is measured solely by usage metrics. Higher usage of a therapeutic product (in theory, a larger ‘dose’) usually leads to better outcomes, as a number of recent studies, including the ML study mentioned above, attest. Even so, we know that this rule of thumb should be interpreted with caution.

For example, hypothetically we could add anxiety-inducing, addictive or gamified features to an app, bombard the user with incessant notifications, or provide extrinsic rewards for progress, all of which would increase ‘engagement’, while at the same time lowering the user’s wellbeing, autonomy and self-motivation.

This example shows how easily misunderstandings can arise around engagement, largely because of inconsistent or ambiguous terminology. People frequently say engagement when what they really mean is usage, i.e., how many times a user logs in, how many minutes they spend on the platform, or how much content they view. These are concrete data points that are easy to measure.

Cognitive or emotional engagement with the therapeutic content of the intervention, on the other hand, exists on a continuum, from shallow and fleeting to deep and meaningful, and is not as easy to measure. Therein lies the problem.

How we measure engagement

At SilverCloud, we rely on user outcome measures (whether the user is feeling better) to infer meaningful engagement with the intervention’s therapeutic content. We also aim to qualify engagement with feedback from our users, to add context and depth to quantitative usage and outcome data. For example, a recent qualitative study of SilverCloud users found that people were still using certain CBT skills, such as cognitive restructuring, long after their usage of the platform had ended.

This long-term skill use is one of the core goals of CBT and of our product at SilverCloud; our programs are designed to promote self-management and device independence. We want our users to learn skills and build heuristics that help them stay mentally healthy for the rest of their lives. We want them to use SilverCloud, get what they need from it, and be able to take that knowledge out into the world.

The Engagement Dilemma

As a product team, we try to hold on to these core goals, but we know that we exist within a voracious attention economy, where the energy and interest of individuals have become a commodity. We are also aware that digital overload is growing as the health app market becomes flooded. As a result, both surface-level attentional engagement and deeper therapeutic engagement are becoming simultaneously harder to attain and more sought after.

We use a number of tools to help us navigate the complex thicket of tensions that surround engagement. These include our engagement strategy (Supportive, Interactive, Personalized, Social), which is embedded in the design of our platform and in our ethos as a product team, and theories such as Self-Determination Theory (SDT), which holds that autonomy, competence, and relatedness are the three key drivers of human motivation and wellbeing.

These theoretical underpinnings are our roots; they keep us stable as we balance competing priorities, opinions, and requirements. It is from these roots that we developed our product principles.

To find out more about how we came up with these principles, check out our Developing Product Principles for Digital Mental Health blog.

Using Product Principles to Guide Decisions

To explain how we use our product principles to make decisions, we have taken the framework of our engagement strategy and identified key engagement-related tensions that we need to balance on a regular basis. These case studies show how principles can serve as practical tools across every aspect of a product team's work.

Supportive

Tension to balance: cost vs. effectiveness

Using human supporters or coaches in digital mental health interventions is more expensive than simply providing the intervention unsupported, or as “self-help”. As well as this economic burden, there are additional burdens of administration, risk management, supervision and training. However, human support has been shown to have a significant impact on the effectiveness of digital mental health interventions, particularly for those people experiencing moderate to severe distress.

Principles used to make decisions: Convey warmth, Ensure ease of access

Human support has been an integral component of the SilverCloud model since its inception. We recognize that in certain situations our programs work well self-guided. For example, in response to the COVID-19 pandemic we released a short program designed primarily for wide dissemination and unsupported use; the goal was to reach as many people as possible and provide simple tools for coping with an unprecedented and distressing situation. In general, however, we believe that keeping a human in the loop is core to the effectiveness of our product.

That being said, we also try to remain flexible and open to the preferences of our users. We have recently been exploring a number of different support models, including opt-in support, having an initial supported touchpoint, and blended models for more severe cases.

Our opt-in support model offers users the option of support right from the beginning of their journey. This offer stays open, so users can opt in at any time. Our pilots with this model have shown that even users who did not reach out for support found it reassuring to know they could contact someone if they needed to.

Our blended models include using SilverCloud as a preparatory intervention for people on waitlists, in tandem with face-to-face care, and as aftercare or relapse prevention. In the combined face-to-face and digital use case, we have found that digital tools can help users reflect on what they did during face-to-face sessions and reinforce what they learned. They can also introduce topics ahead of time, so that users arrive at a session with an understanding of the subject and questions for their therapist, giving the therapist more time to focus on individualized therapeutic support.

In summary: We try to keep a human in the loop as best practice for our intervention, but we also strive for flexibility when it comes to support models.

Interactive

Tension to balance: development time vs. usefulness for the user

Providing opportunities for interaction and active learning is an effective engagement and knowledge retention strategy across all learning environments. It is also a key part of CBT – skills such as goal setting and cognitive restructuring need to be applied to an individual’s own life and personal experiences in order to really make sense for them.

Interactive tools help to take CBT concepts and techniques off the page, bringing them to life with tangibility and personal relevance. Yet interactive tools require significant development resources, particularly when it comes to idiosyncratic or one-off designs.

Principles used to make decisions: Facilitate change, Be consistent

We continually question which therapeutic tools we develop and whether the user really needs them. We include a lot of tools in our programs, but we rarely build elaborate or flashy ones, focusing instead on the simplest interaction the user needs to engage with a concept, put it into practice, or make it relevant to their own life.

Each of our tools is accompanied by a ‘paper tool’ version, a simple printable PDF worksheet, giving the user a choice between digital and analog interaction. Something that surprises many technophiles is that people actually use these paper tools; in a recent qualitative study, one user reported laminating a paper tool and keeping it in their bag for easy access. Other users reported downloading content or tools, or screenshotting them to their phones, to use outside the platform. The core idea behind our interactive tools is that they empower the user to take action; once we make this our primary focus, the technology becomes a secondary concern.

Consistent tool interactions also help us reduce development time while building a sense of competence in our users. We have spent a lot of time talking with clinicians about how CBT exercises are done face-to-face, and for the most part they are done with pen and paper.

Many of the core exercises follow similar patterns and structures, so we have developed templates that we can reuse, reducing development time and creating consistency and familiarity for the user. The content of these exercises can be quite challenging, so by using simple, repeated interactions, we allow the user to focus on the therapeutic objective, rather than using energy trying to understand how to use each new tool.

In summary: We build lots of tools, but we keep them simple and consistent, always considering the content and purpose of the tool ahead of the technology.

Personalized

Tension to balance: autonomy vs. direction

Giving a user too much choice is a serious design taboo; nobody wants to overwhelm their user with endless options (FOBO, the fear of better options, is real). This is even more of a concern when your user may already be experiencing psychological distress. The alternative is to direct your user down a path that you know is a good one, from experience with other users or from previous research. This works to a certain extent, but there is still a chance that the path will not be right for every user.

Automated personalization through artificial intelligence (AI), i.e., where an algorithm selects a path based on data gathered about the user, seems like a good solution here, but as with the issue of usage versus engagement, it needs to be approached with caution. If Spotify recommends a song you don’t like, no harm is done. However, creating incorrect profiles and recommendations for someone in distress could have serious consequences.

Despite the increasing use of AI for personalization in mental healthcare, research on the subject is scarce. This is surprising given that AI is widely known to carry risks such as profiling and the perpetuation of discrimination.

A recent study added an interesting layer to the personalization paradox by using fake AI guidance that was in fact merely a randomly generated selection. The researchers found that while users reported a preference for ‘automated guidance’, their behavioural data indicated that a mix of autonomous choice and guidance led to higher engagement. This suggests that personalization is as much about the user’s perception of autonomy as it is about access to appropriate content.

Principles used to make decisions: Keep it simple, Include everyone

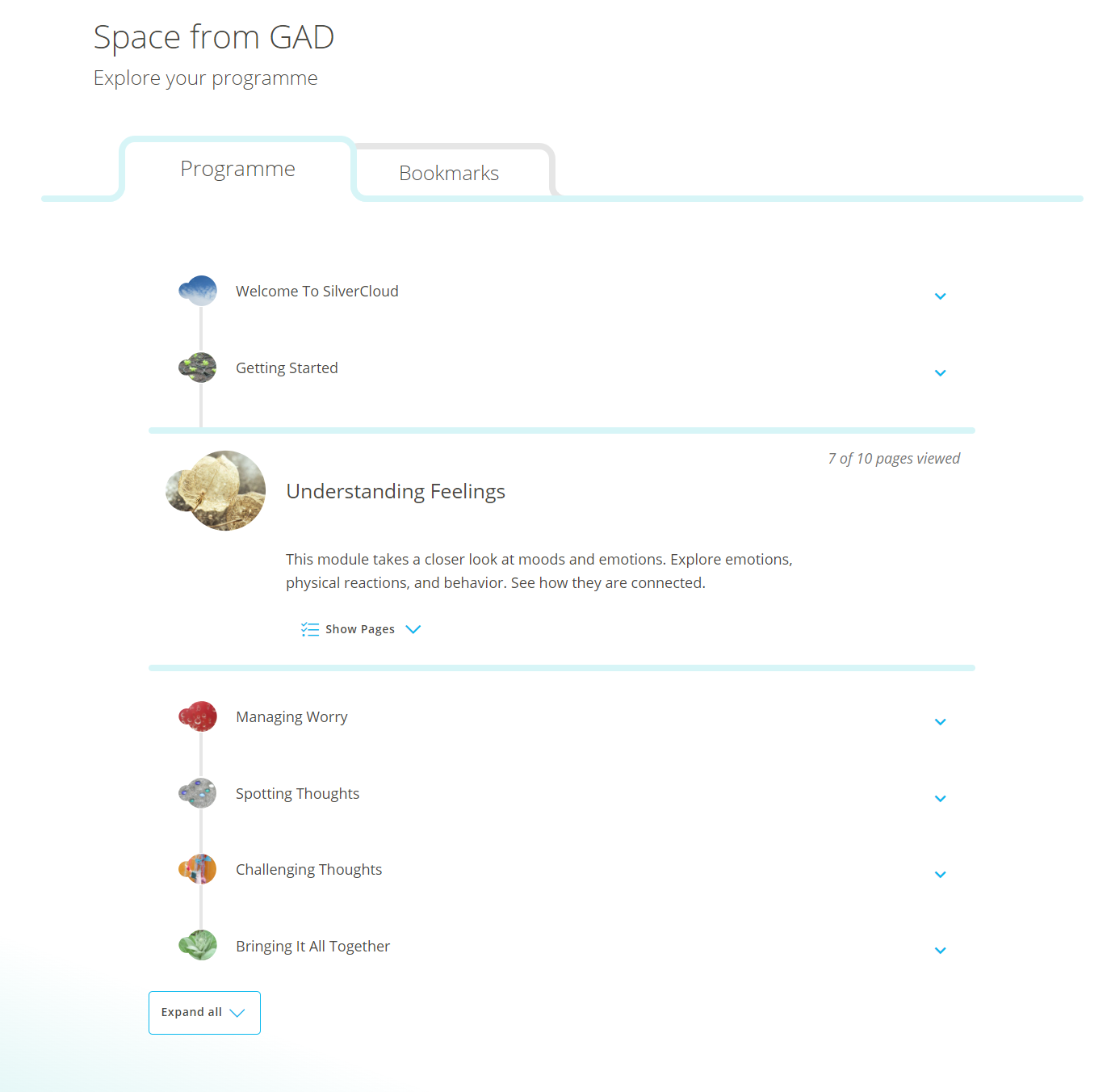

We opt for simplicity in our approach to personalization. We guide users through our programs not with force or restrictions, but through the careful placement of elements and the layout of our UI. Our program modules are displayed in a linear fashion, with content that builds from one module to the next, yet we give the user total freedom over where they go and what they do next.

For those users who love to roam and explore, no page is blocked; they have complete control and can wander within the programs as they please. At the same time, for those users who desire structure and focus, we have designed a clear ‘path of least resistance’ that guides them through a logical progression of content, building in complexity.

Program page showing the linear module structure

We also use our wonderful human supporters as personalizers, as they are best positioned to present the right thing at the right time for each individual user. Supporters have the ability to recommend and directly link to any piece of content or tool in the user’s program; they can also unlock additional content for users. Having these recommendations come from the supporter gives them more weight, and also reinforces the human element, confirming for the user that there is really someone on the other side listening to them.

When it comes to AI, we have avoided using it directly with our users because not enough is known about the complex risks associated with digital discrimination. Instead we decided, in collaboration with Microsoft, to use AI as a tool to help our supporters understand how their clients are progressing and how likely they are to get better, so they can further tailor their feedback. In this way we ensure that any AI-generated information has a human gatekeeper.

In summary: We give users both a ‘path of least resistance’ and the ability to customize their own journey, opting for user autonomy over AI decision making.

Social

Tension to balance: relatedness vs. safety

Drawing again on SDT, and also on the abundant research literature on the topic, we know that human connection is crucial to motivation. In traditional therapy this comes in the form of the therapist-patient relationship, but with digital interventions, opportunities for relationship building and social connection are harder to come by.

Social features are also somewhat contentious, particularly in the realm of peer-to-peer support, because the wellbeing of potentially vulnerable users is placed in the hands of unknown sources. Peer forums and support systems require moderation and risk protocols in order to function safely, with the best interests of all users at heart.

Principles used to make decisions: Prioritize safety & security, Convey warmth

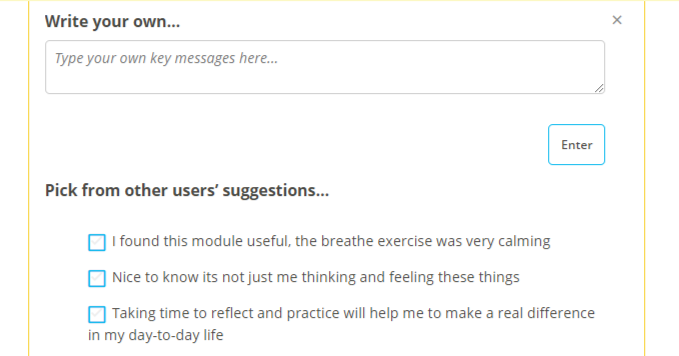

We place great weight on the safety of our users at SilverCloud, so we have opted for a compromise when it comes to social and peer connections within our platform. We have incorporated a number of social features that let users see that they are not alone and that other people are using the platform too. These include anonymous usage indicators (likes), as well as anonymous suggestions from other users within certain open-text tools.

Example of anonymous suggestions from other users

We include personal stories in every program we create. They function as another connection point to enhance relatedness, but also as an alternative voice for delivering content and as a practical how-to guide for putting skills into practice. Our human supporters are also a key part of our social strategy; studies have shown that user ratings of therapeutic relationships built in a purely online context are comparable to ratings for face-to-face therapy.

Another core aspect of relatedness is the ability to help other people. We recently developed our first program with this in mind: a program for parents of anxious children, which teaches them both how to build positive coping strategies with their child and how to handle their own emotions in response to their child’s anxiety. This sort of ‘train the trainer’ approach holds a lot of promise in a world where stigma prevents people from seeking help for themselves, and where individualism spreads as opportunities for connection grow scarce.

In summary: We use multiple, low-risk social elements that help users to feel connected, while keeping them safe.

Conclusion

Nowhere are the ethical concerns around designing for mental healthcare more apparent than in the realm of user engagement. Many digital products on the market focus on hooking attention and exploiting our cognitive biases as a way to drive engagement. We know the detrimental effect this can have on a person’s wellbeing, which is the very thing we are trying to improve.

As designers, we have a responsibility to think carefully about what we are designing and the implications of our products for the people using them. We have a responsibility to fight for the voice of the user, to prioritize their needs amidst competing demands. For that reason, the quality of a user’s engagement means more to us than the quantity.

When it comes to engagement, a clear, shared team ethos and a set of grounded product principles are essential. We know that there is never a ‘right’ answer to the many-layered challenges we face when designing engaging digital mental health interventions; every solution involves weighing multiple tensions, and every available choice has both benefits and drawbacks.

Product principles serve as an ethical guide when navigating these decisions, a collective moral compass. They function much as personal values do in guiding the decisions you make in your life. When you are at a crossroads, you look to your values, to what is most important to you, and the way becomes clear. The same goes for product principles.

About the Authors

Jacinta Jardine is a Product Innovation Researcher and PhD student in Human-Computer Interaction with Trinity College Dublin and SilverCloud Health. Her research is focused on digital ‘readiness for change’ interventions, which aim to prepare clients for online therapy.

Maria Lyons is a User Experience Designer at SilverCloud Health. She is interested in improving the digital experience for people with mental health difficulties through human-centred design.