This series of blog posts explores the relevance of implementation science to internet-delivered interventions and provides a high-level overview of the field. The first post gave a short introduction to the topic; this second post explores some of the outcomes relevant to implementing internet-delivered cognitive behaviour therapy (iCBT) in a healthcare system.

The Patient Health Questionnaire and the Generalised Anxiety Disorder inventory are examples of psychometric assessments used to quantify treatment outcomes of iCBT. These measures, designed to assess specific constructs, are deemed reliable and valid when clinicians and researchers can be confident that they capture the construct they are intended to measure, and that similar findings can be obtained across different cases and contexts.

Reliability: the extent to which results from a measure are consistent across time, cases and contexts.

Validity:

- Does the measure test what it intends to?

- Does it measure all angles of a given construct?

- Does it correlate well with similar measures?

These are questions of validity.
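As a rough illustration of how two of these properties are often quantified, the sketch below computes a Pearson correlation between two administrations of the same questionnaire (one common way to look at consistency over time) and between the questionnaire and a similar measure (the "does it correlate well with similar measures?" question). All scores are made-up values for illustration, and the function and variable names are hypothetical; this is a minimal sketch, not a full psychometric evaluation.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)


# Hypothetical total scores for six people (invented for this example).
time_1 = [12, 7, 18, 4, 15, 9]           # first administration
time_2 = [11, 8, 17, 5, 14, 10]          # same questionnaire, some weeks later
similar_measure = [10, 6, 16, 5, 13, 8]  # a different but related questionnaire

test_retest = pearson_r(time_1, time_2)          # consistency across time
convergent = pearson_r(time_1, similar_measure)  # agreement with a similar measure

print(f"Test-retest correlation: {test_retest:.2f}")
print(f"Correlation with similar measure: {convergent:.2f}")
```

Higher correlations suggest more consistent scores and closer agreement with related measures. In practice, researchers report a wider set of statistics than this single coefficient.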

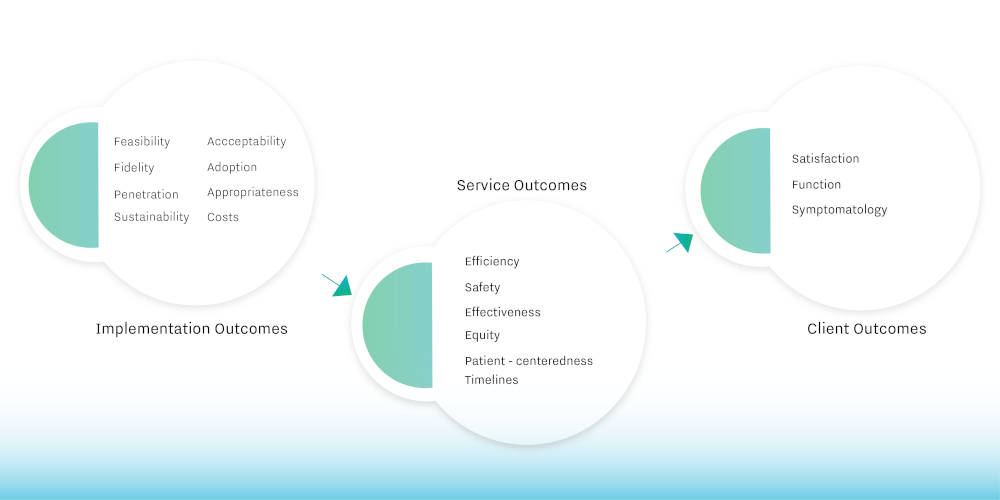

Just as we collect the mental health outcomes we are familiar with, we can also collect outcome data across several domains that allow us to interpret the implementation of new interventions and measure its success.

Within the field of implementation science, the most commonly referenced outcomes of importance come from the work of Proctor and colleagues (2009; 2011). These were proposed as part of a conceptual model of implementation to “intellectually coalesce the field and guide implementation research”. The outcomes are acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration and sustainability. In developing these, the researchers acknowledged the importance of implementation science, but also realised that without base outcomes to focus on when conducting research, there would be no coherent effort to guide and develop the field of implementation. These outcomes come before and influence both service and client outcomes, and logically so; if an implementation is conducted poorly, it is unlikely to benefit the services administering it and those receiving it.

(Table taken from Proctor et al., 2011)

Measuring these outcomes throughout the course of an implementation can provide valuable knowledge that, in some cases, can greatly influence the probability of the intervention integrating with service-as-usual. These outcomes are also proposed to be salient at different times during the implementation process. The following examples illustrate the benefits of measuring implementation outcomes.

- Collecting information on levels of acceptability while training clinicians in the use of an iCBT intervention may highlight a lack of “buy-in”. This can originate from a lack of knowledge of the literature base on iCBT, doubts about the credibility of the recovery rates that iCBT produces, or a lack of knowledge about digital therapies in general. With this information, the training plan can be tailored to the issues that arise.

- Monitoring fidelity throughout an implementation can provide valuable insight into the real-life use of the intervention. When planning to implement an iCBT intervention, services sometimes prospectively generate an ideal model of iCBT delivery to patients. However, monitoring fidelity to this ideal model may reveal that the intervention has been adapted in unforeseen ways to a dynamic service context, prompting a revision of the ideal model of delivery.

- A sustainability analysis after several months of working with a new iCBT intervention can detail the negative aspects of working with the intervention that need to be addressed before it is fully integrated into the provision of a normal service. For example, the implementation may have gone well overall, but embedding the intervention firmly within service pathways may require a revision of workflows or increased managerial support.

Although progress has been made in highlighting the importance of these outcomes through the literature base, a systematic review by Lewis and colleagues (2015) has highlighted the poor state of measures associated with the eight implementation outcomes. Out of 104 questionnaires used in various implementation studies, only one measure was found to have a sufficient standard of psychometric reliability and validity. A lack of research evidence for outcome measures is common to all emerging research fields, but it is something that will be overcome in time. Implementation science certainly has the potential to bridge the gap between research and practice. Providing researchers with validated tools to measure relevant outcomes will serve to further the academic field and, in turn, support wider use of well-established iCBT interventions in the provision of mental healthcare.

“Science rests on the adequacy of its measurement. Poor measures provide a weak foundation for research and clinical endeavors.”

(Foster & Cone, 1995)

Dan Duffy has worked with SilverCloud as a researcher for the last 3 years, and is mainly involved with the trials that are being conducted in the United Kingdom’s IAPT Programme. His primary research interest is in the implementation of technology-delivered interventions within routine care, and he will soon begin doctoral research in this area. His other research interests include the development and provision of technology-delivered interventions to minority and marginalised groups.

Find Daniel on Twitter: @dannyjduffy

See Daniel's research articles: https://orcid.org/

References for further reading

Proctor, E. K., Landsverk, J., Aarons, G., Chambers, D., Glisson, C., & Mittman, B. (2009). Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research, 36(1), 24-34.

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., ... & Hensley, M. (2011). Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65-76.

Proctor, E. K., Powell, B. J., & McMillen, J. C. (2013). Implementation strategies: recommendations for specifying and reporting. Implementation Science, 8(1), 139.

Lewis, C. C., Fischer, S., Weiner, B. J., Stanick, C., Kim, M., & Martinez, R. G. (2015). Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implementation Science, 10(1), 155.